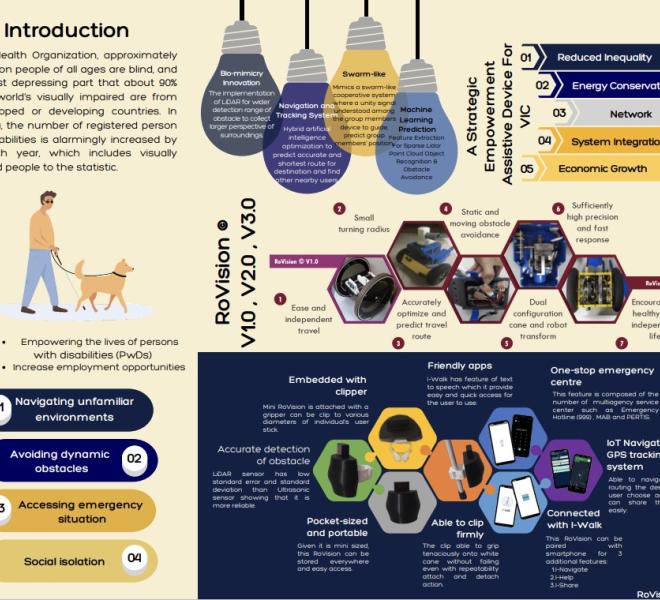

Miniaturise RoVision: A Revolutionised Travelling Aid for Visually Impaired Community (VIC)

Description

The Miniaturise RoVision V3.0 is equipped with a bio-inspired, artificial-intelligence-based system. The latest design is inspired by the pedal-muscle structure of the escargot (snail), which is adapted into the embedded clip. The rotatable RoVision, which uses a LiDAR sensor for object detection over a wider field of view, is modelled on the vision of the escargot's two tentacle eyes. Its capability is strengthened by a hybrid of ant colony optimisation (ACO) and particle swarm optimisation (PSO), which combines the merits of both algorithms to predict a route that is not only accurate but also the shortest. Inspired by observations of swarms of social insects, the hybrid AI is also used for a localised, swarm-like cooperative system. This lets the targeted users travel in a larger group with the assurance that they know who their companions are, and it also serves as a safety precaution. For example, when four visually impaired individuals start travelling from different starting points, multiple RoVisions can communicate with each other to find the best routes for reaching the destination together. This helps with the problem of travelling in unfamiliar environments, and could boost confidence and reduce social isolation.

Highlights

Another challenge is the ability to avoid dynamic obstacles, since static obstacles may cause fewer casualties than dynamic ones. Our solution aims to alert the user to the surroundings quickly and accurately using feature extraction for sparse LiDAR point cloud object recognition and obstacle avoidance, combining two methods: Clustered Extraction (CE) and Centroid Based Clustered Extraction (CBCE). The experimental data concentrates on moving-object detection for three classes (human, motorcyclist and car), each with four pose orientation headings (front, right, left, back). The figure shows point cloud data examples of a human, a motorcyclist and a car: the raw point cloud, followed by the filtering process and the point cloud after clustering.

Clustered Extraction (CE) method. The LiDAR point cloud is output in a Cartesian coordinate system relative to the x, y and z origin. The data are extracted into three parts. The first part is alpha (α), which stores the width (w), length (l) and height (h) of the object, and the number of points in the cluster (N). The second part is the array beta (β), which stores the number of elements within the segregated intervals. The third part is gamma (γ), the minimum and maximum values of each axis in the cluster. The stored coordinate elements and the number of elements within each interval are the input for the classifier.

Centroid Based Clustered Extraction (CBCE) method. From here onwards, two additional features are extracted for the CBCE method: delta (δ), for features related to the density to centroid height ratio (ρ/h), and epsilon (ε), for features related to the density to volume ratio (ρ/V). For each part, the centroid height is calculated as a reference point. From the extracted features, classification is done with a 75%-25% split of training and testing data.
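
The CE feature groups and the 75%-25% split described above can be sketched roughly as follows. This is a minimal illustration, not the RoVision implementation: the function names `ce_features` and `split_75_25`, the parameter `n_intervals`, the use of an axis-aligned bounding box for w, l and h, and the choice of equal-width height slices for β are all assumptions, since the text does not specify them. The CBCE features δ and ε are left out because the density term is not defined here.

```python
import random
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in the LiDAR Cartesian frame


def ce_features(cluster: Sequence[Point], n_intervals: int = 4) -> Dict[str, List[float]]:
    """Build the alpha, beta and gamma feature groups for one clustered object.

    Assumptions (not stated in the source): width, length and height are the
    x, y and z extents of the axis-aligned bounding box, and beta counts the
    points in `n_intervals` equal-width slices along the z (height) axis.
    """
    if not cluster:
        raise ValueError("cluster must contain at least one point")
    xs, ys, zs = zip(*cluster)
    mins = [min(xs), min(ys), min(zs)]
    maxs = [max(xs), max(ys), max(zs)]
    width, length, height = (hi - lo for lo, hi in zip(mins, maxs))

    # alpha: object dimensions plus the number of points N in the cluster
    alpha = [width, length, height, float(len(cluster))]

    # beta: number of points falling in each height interval
    beta = [0.0] * n_intervals
    for z in zs:
        if height == 0:
            index = 0  # flat cluster: every point lands in the first slice
        else:
            index = min(int((z - mins[2]) / height * n_intervals), n_intervals - 1)
        beta[index] += 1.0

    # gamma: minimum and maximum value on each axis
    gamma = [mins[0], maxs[0], mins[1], maxs[1], mins[2], maxs[2]]
    return {"alpha": alpha, "beta": beta, "gamma": gamma}


def split_75_25(samples: Sequence, seed: int = 0) -> Tuple[list, list]:
    """Shuffle the samples and split them 75% training / 25% testing."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * 0.75)
    return shuffled[:cut], shuffled[cut:]
```

Concatenating `alpha`, `beta` and `gamma` gives one fixed-length feature vector per cluster, which is the kind of input a classifier would expect. The fixed seed in `split_75_25` only makes the illustrative split repeatable.
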
The collective features are optimised with a CNN whose number of hidden layers ranges from 1 to 10, with a batch size of 10, 1000 epochs, and Rectified Linear Unit (ReLU) and Softmax activation functions. Equipped with feature extraction and a convolutional neural network (CNN) object classifier, RoVision can detect both static and moving objects with 97% accuracy, which increases the safety level and group interaction capability and hence supports sustainable wellbeing and a high-economy lifestyle.

The RoVision v1.0 and v2.0 systems provide modular subsystems with a dual-configuration cane and robot transformation, comprising: 1) swarm-like interactive AI optimisation for group communication, location detection and route prediction; 2) a small turning radius with obstacle avoidance capability; and 3) accurate optimisation and prediction of the travel route with sufficiently high precision and fast response. Together these encourage a healthy and independent life. Through R&D and improvements, the RoVision V3.0 innovation is equipped with: 1) accurate obstacle detection; 2) an embedded clipper in a pocket-sized system that grips firmly onto the cane; and 3) the user-friendly i-WALK app and GPS tracking connectivity, which together provide a one-stop emergency centre solution.

Contact Person/Inventor

| Name | Email | Contact Phone |
|---|---|---|
| Ami Nordin Bin Ismail | amiluq@iium.edu.my | 60192736483 |

Award

| Award Title | Award Achievement | Award Year Received |
|---|---|---|
| Malaysian Technology Expo | MTE 2023 | 2023 |
